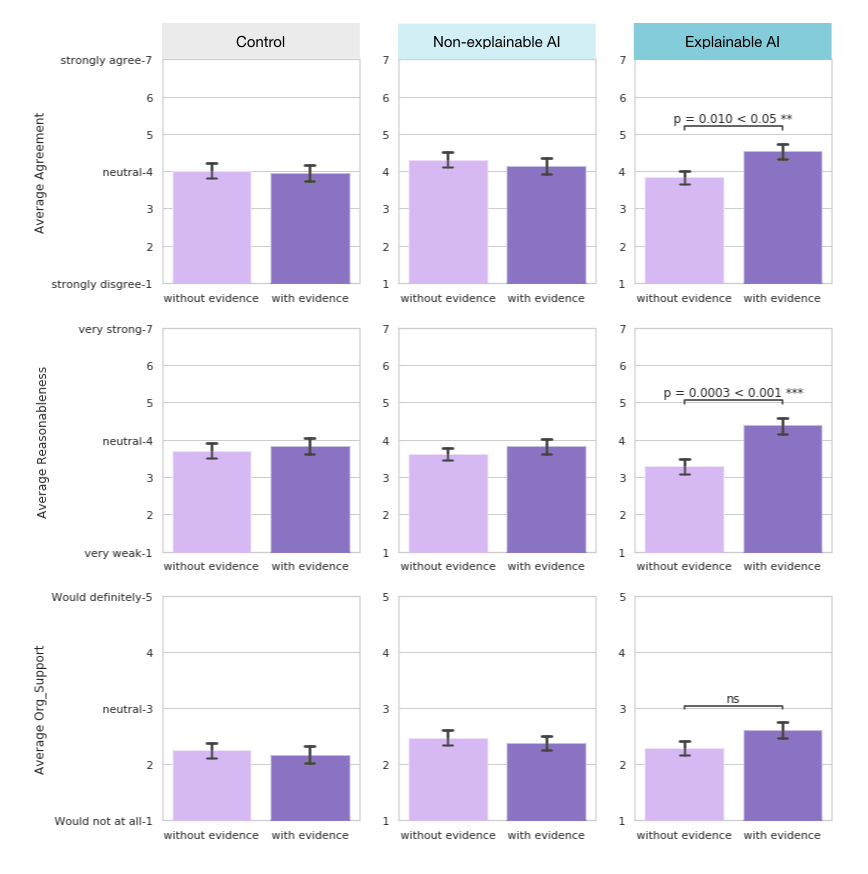

Human judgments and decisions are prone to errors in reasoning caused by factors such as personal biases and external misinformation. We explore the possibility of enhanced reasoning by implementing a wearable AI system as a human symbiotic counterpart. We present "Wearable Reasoner," a proof-of-concept wearable system capable of analyzing whether an argument is stated with supporting evidence or not. We explore the impact of argumentation mining and of the explainability of the AI feedback on the user through an experimental study of verbal statement evaluation tasks. The results demonstrate that the device with explainable feedback is effective in enhancing rationality by helping users differentiate between statements supported by evidence and those without. When assisted by an AI system with explainable feedback, users consider claims supported by evidence significantly more reasonable, and agree with them significantly more, than claims without evidence. Qualitative interviews reveal users' internal processes of reflecting on and integrating the new information into their judgment and decision making, emphasizing improved evaluation of presented arguments.

TIME: September 2019 ~ March 2020

AUTHORS: Valdemar Danry, Pat Pataranutaporn, Yaoli Mao, Pattie Maes

KEYWORDS: Assisted Reasoning, Cognitive Enhancement, Augmented Humans, Argumentation Mining, Artificial Intelligence.

SKILL: Machine Learning, Natural Language Processing, iOS Development, Digital Prototyping

Publication: Valdemar Danry, Pat Pataranutaporn, Yaoli Mao, and Pattie Maes. 2020. Wearable Reasoner: Towards Enhanced Human Rationality Through A Wearable Device With An Explainable AI Assistant. In AHs ’20: Augmented Humans International Conference (AHs ’20), March 16–17, 2020, Kaiserslautern, Germany. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1145/3384657.3384799

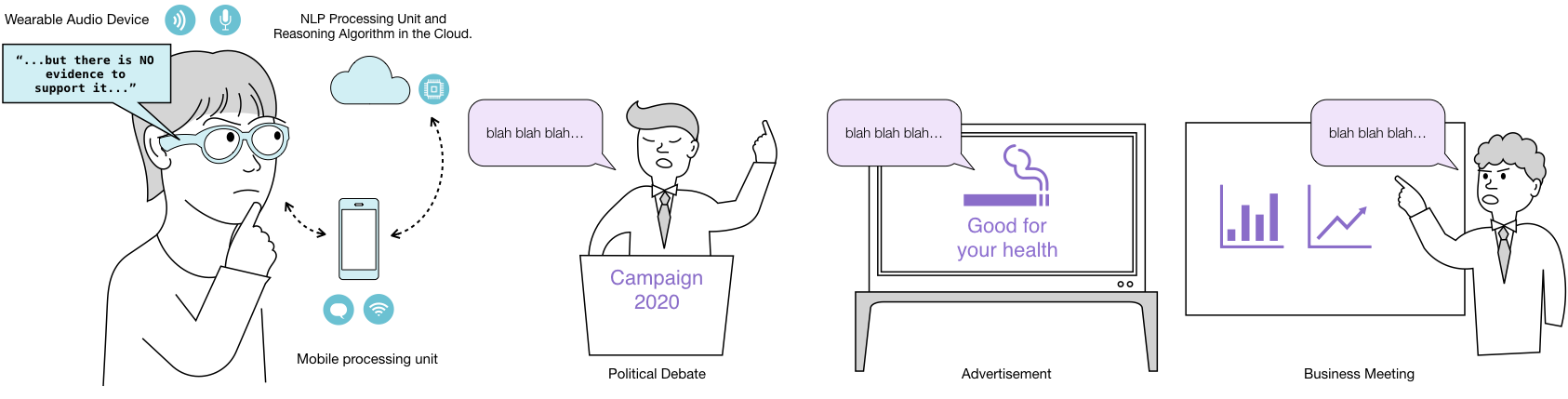

Left: Overview of the Wearable Reasoner system. Right: Envisioned use cases of Wearable Reasoner for detecting empty claims (arguments presented without evidence) in political speeches, advertisements, or high-stakes meetings. Credit: Fluid Interfaces

Based on recent advances in artificial intelligence (AI), argument mining, and computational linguistics, we envision the possibility of having an AI assistant as a symbiotic counterpart to the biological human brain. As a "second brain," the AI serves as an extended, rational reasoning organ that assists the individual and can teach them to become more rational over time by making them aware of biased and fallacious information through just-in-time feedback. To ensure the transparency of the AI system and prevent it from becoming an AI "black box," it is important for the AI to be able to explain how it generates its classifications. Such explainable AI also allows the person to speculate about, internalize, and learn from the AI system's output, and helps prevent over-reliance on the technology.

Our vision for Human + Machine. Credit: Fluid Interfaces

To explore how different types of real-time, AI-based feedback might enhance the user's reasoning in argument-based judgment and decision-making tasks, we present a prototype device: "Wearable Reasoner," a wearable system capable of identifying whether an argument is stated with evidence or not. We conducted a closed-environment experimental study comparing two types of interventions on user judgment and decision making, Explainable AI versus Non-Explainable AI, delivered through a device that tells the user whether an argument is stated with or without evidence.
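To make the difference between the two feedback conditions concrete, here is a minimal sketch in Python. It is not the Wearable Reasoner implementation: the keyword list `EVIDENCE_CUES` and the functions `classify_statement` and `feedback` are hypothetical, and the regex matcher is a deliberately crude stand-in for a trained argument-mining model. The point it illustrates is that both conditions share the same classification; the explainable condition additionally reports what in the statement led to the label.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical evidence cues: a toy stand-in for a trained argument-mining
# model, NOT the classifier used in Wearable Reasoner.
EVIDENCE_CUES = [
    r"\baccording to\b",
    r"\bstud(?:y|ies) (?:show|shows|found|find)\b",
    r"\bresearch (?:shows|suggests|found)\b",
    r"\bdata (?:show|shows|suggest|suggests)\b",
    r"\b\d+(?:\.\d+)?\s?%",  # a percentage figure
]


@dataclass
class Classification:
    has_evidence: bool
    cue: Optional[str]  # matched text, reused as the explanation


def classify_statement(text: str) -> Classification:
    """Label a statement as evidence-backed if any cue pattern matches."""
    for pattern in EVIDENCE_CUES:
        match = re.search(pattern, text, flags=re.IGNORECASE)
        if match:
            return Classification(True, match.group(0))
    return Classification(False, None)


def feedback(text: str, explainable: bool) -> str:
    """Build the message for either experimental condition."""
    result = classify_statement(text)
    label = "with evidence" if result.has_evidence else "without evidence"
    if not explainable:
        # Non-Explainable AI condition: the label alone.
        return f"Claim stated {label}."
    if result.has_evidence:
        # Explainable AI condition: also name what triggered the label.
        return f"Claim stated {label}: found '{result.cue}'."
    return f"Claim stated {label}: no supporting source, study, or figure found."


if __name__ == "__main__":
    claim = "This policy is obviously the best choice."
    print(feedback(claim, explainable=False))
    print(feedback(claim, explainable=True))
```

Keeping a single classifier and varying only the message isolates explainability as the manipulated variable, which mirrors the study's comparison of Explainable and Non-Explainable AI feedback.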

System Architecture of Wearable Reasoner. Credit: Fluid Interfaces

Our findings demonstrate that our prototype with Explainable AI feedback has a significant effect on users' level of agreement with the presented arguments, as well as on how reasonable they find them. When assisted with such feedback, users tend to agree more with claims supported by evidence and consider them more reasonable than claims without evidence. In qualitative interviews, users report their internal processes of speculating about and integrating the information from the AI system into their own judgment and decision making, resulting in improved evaluation of presented arguments.