RESEARCH

Systems for Human Reasoning

The pursuit of knowledge and understanding has long been a driving force for humanity, enabling us to unlock secrets of the cosmos, develop innovative technologies, and address complex global challenges. However, despite our cognitive leaps, we still grapple with the limits of our rationality and with our biases and emotions, especially in today's increasingly complex and information-saturated world. This research investigates AI systems and methods that enhance reasoning, and their impact on human decision-making.

Projects

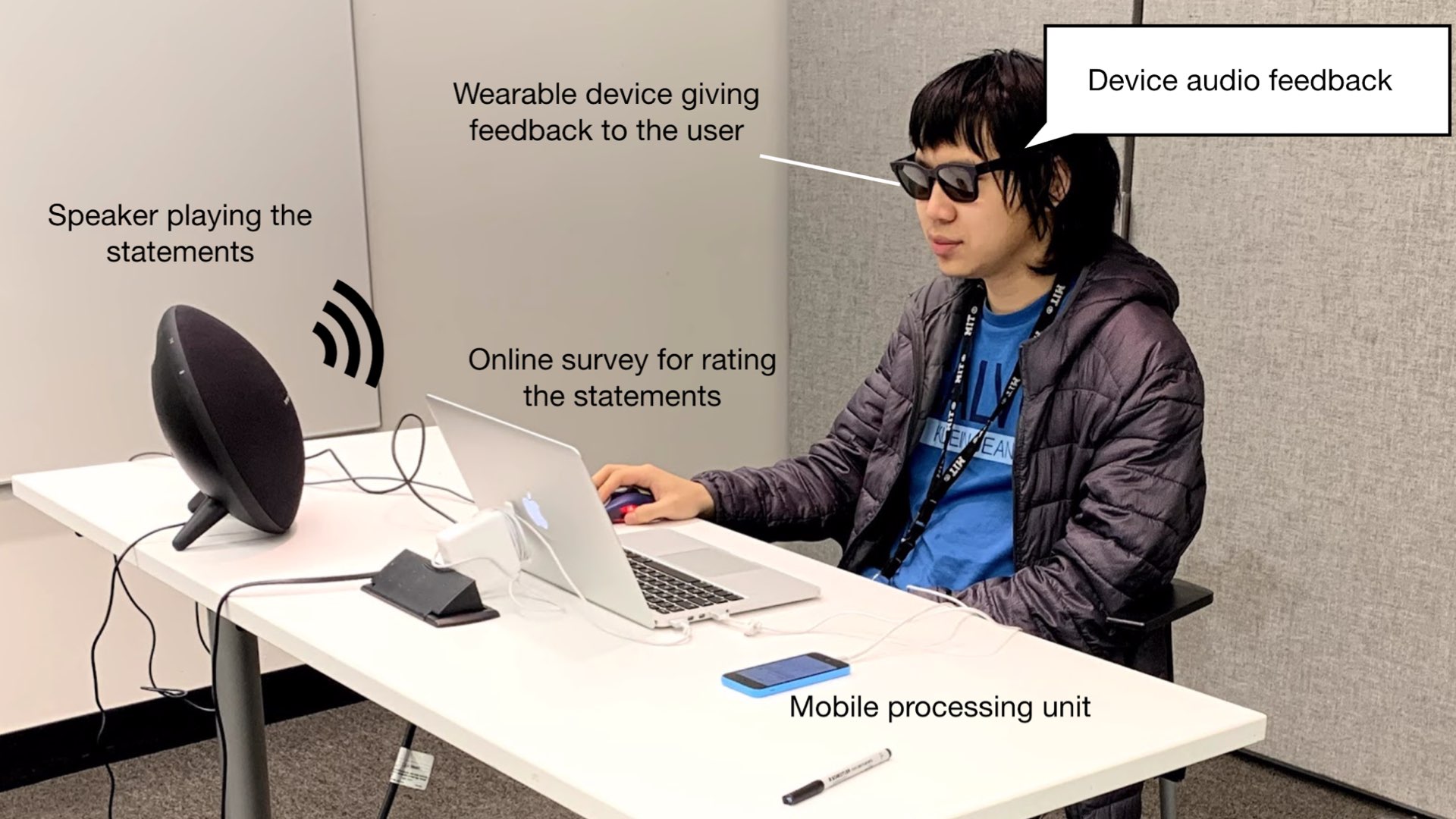

Wearable Reasoner:

Towards Enhanced Human Rationality

A wearable system that analyzes whether an argument is stated with supporting reasons, prompting people to question and reflect on the justifications for their own beliefs and the arguments of others. Read more.

Keywords:

Assisted Reasoning, Cognitive Enhancement, Augmented Humans, Argumentation Mining, Natural Language Processing.

Publication:

ACM Augmented Humans 2020.

Exhibition:

MIT Museum: AI Mind The Gap.

Press:

Hackster.io

Soylent News

Role:

Initiator and concept-creator, developer of machine learning model, conductor of experimental study, lead on write-up.

AI Systems That Frame Explanations as Questions

This project presents the idea of AI explanations that reframe information as questions to actively engage and scaffold people's thinking. In a study with 210 subjects, we compared AI systems that ask questions with systems that tell people answers and found that AI-framed questioning significantly increased people's accuracy in discerning logically flawed information. Read more.

Keywords:

Human-AI Interaction, Assisted Reasoning, Cognitive Enhancement, Augmented Humans, Argumentation Mining, Natural Language Processing.

Publication:

ACM CHI 2023.

Press:

“A chatbot that asks questions could help you spot when it makes no sense”, MIT Technology Review

Role:

Initiator and concept-creator, developer of machine learning model, conductor of experimental study, lead on write-up.

Deceptive AI Explanations Can Amplify Beliefs in Misinformation

We explore the impact of deceptive AI-generated explanations on belief in misinformation and find that people more readily believe false information when an AI system gives them deceptive explanations for why it is true.

Keywords:

Human-AI Interaction, Assisted Reasoning, Misinformation, Computational Social Science.

Publication:

Under review (Preprint).

Role:

Initiator and concept-creator, developer of machine learning model, designer and conductor of experimental study, lead on write-up.

MindMapper:

A Wearable AI System That Learns About A User To Mitigate Their Biases

A wearable system that uses an LLM-based AI agent to learn about a user's behaviors over time and alert them in real time when they might be vulnerable to their personal biases.

Keywords:

AI Agents, Large Language Models, Reasoning Augmentation.

Publication:

Work in progress.