Do Cyborgs Dream of Experiential Brains?

Prototyping Technology across Consciousness.

1.1 Experiential Reasoning

1.2 Experiential Identities

1.3 Experiential Environments

Advancing Experiential Factors in Human-Computer Interaction across consciousness can allow for novel applications and more natural interactions with technology. In the following, I present three potential areas of research interest: (i) Experiential Reasoning, (ii) Experiential Identities, and (iii) Experiential Environments.

1.0 The Future of Computing is Experiential

With the spread of intelligent and ubiquitous computers, we have in many ways become cognitive cyborgs: most of us are inseparable from our personal devices and increasingly rely on them for thinking, remembering, learning, communicating, and more. However, while these devices significantly improve the functional capabilities and performance of our brain and body, they are not experienced in the way that processes in our brain and body are experienced, that is, by being felt a certain way. Decision making, for instance, is not just about arriving at a conclusion through systematic thinking; it involves, among other things, “intuitions”, “gut-feelings”, and different degrees of conscious awareness and control. Thus, if humans are to become cyborgs as M. Clynes and N. S. Kline originally proposed in 1960, it is not enough merely to augment the functionality of human cognitive and perceptual systems: we also need to consider the natural phenomenology of the human body, thoughts, and world. As we integrate with technology, we want to feel that we, not the technology, are the ones thinking, feeling, expressing, and experiencing reality. With the newfound focus on consciousness and phenomenology within experimental science, I believe there are now ample opportunities to apply such research to technology. In my research, I envision and build technologies that initiate, modulate, and play with human consciousness and phenomenology to positively alter human experience, augment our cognition, and provide us with new tools for emotional communication and understanding.

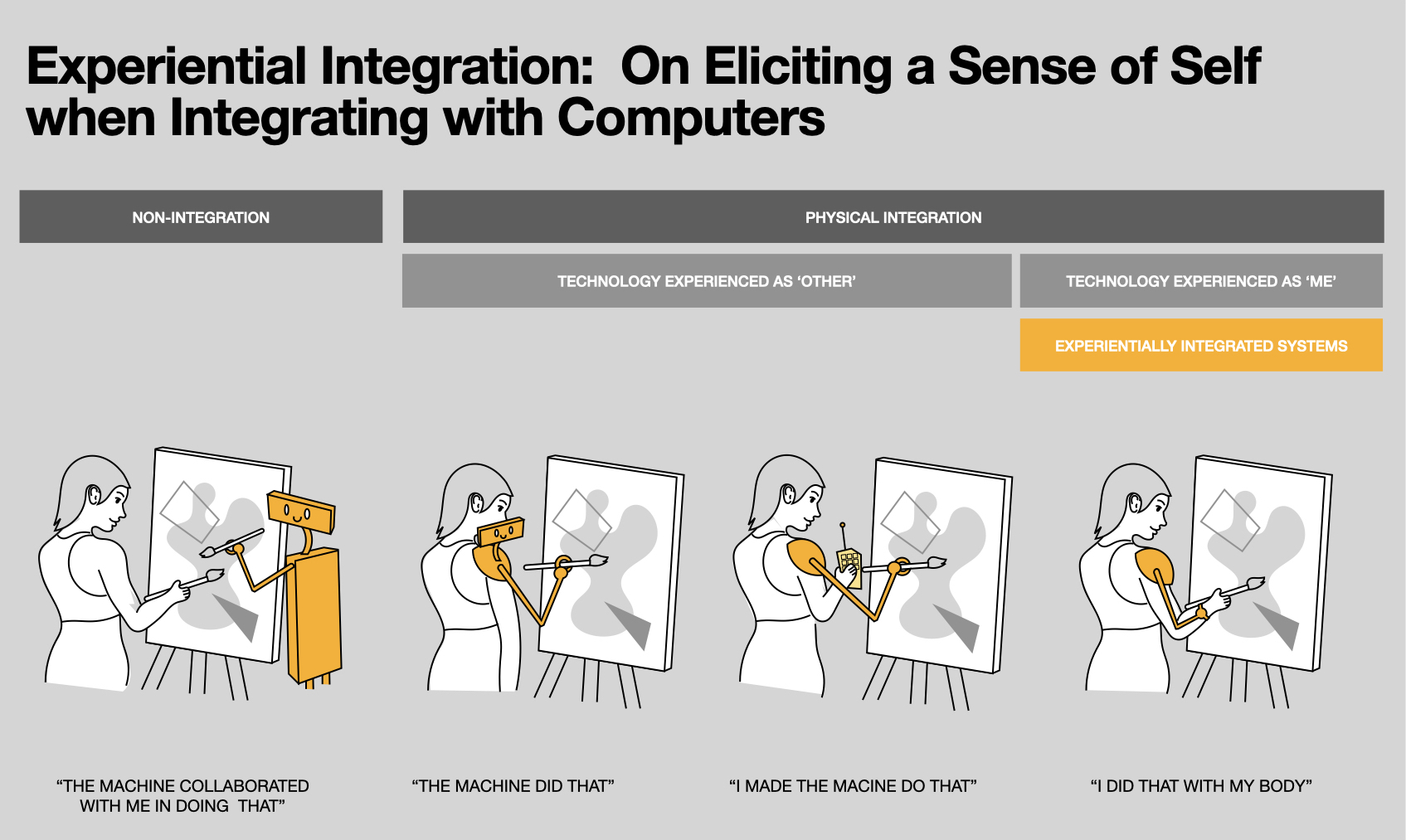

In collaboration with Pat Pataranutaporn (PhD student, MIT Media Lab), Sang-won Leigh (Assistant Professor, Georgia Tech), and Pattie Maes (Professor, MIT Media Lab), I have been articulating these thoughts and their design implications in a paper currently under review for CHI 2021. In the paper, we present the concept of experiential integration, that is, how one can design technology that feels like part of the user, based on neurocognitive and phenomenological research. The paper draws on concepts such as the sense of body ownership, the sense of agency, self-conceptualization, and implicit and explicit awareness, as well as the respective contributions of central motor signals and peripheral afferent signals to these varieties of body experience.

Additionally, I am co-organizing a workshop for CHI 2021 with leading researchers in the field of Human-Computer Integration, such as Dag Svanæs, Florian “Floyd” Mueller, and Pedro Lopes. The aim of the workshop is to further discuss the idea of experiential integration, clarify the theoretical landscape, and arrive at concrete implications for design and evaluation. The workshop includes hands-on experiments with novel body illusions, the development of an experiential integration cookbook, and instructor-guided sessions on how to design and evaluate experiential integration. Lastly, the workshop revisits existing neurocognitive, phenomenological, and Human-Computer Integration research to identify and articulate gaps in theoretical and methodological approaches, in particular those concerning the experiential layer of designing Human-Computer Integrated systems.

In the future, I imagine that a greater interplay between technology and conscious structures will create ample opportunities for deeper augmentations and affective technologies that feel like extensions of our natural thinking, self-perception, and sense of being in a meaningful world. My research proposes to build such technologies based on empirical evidence from neurocognitive science, phenomenology, and consciousness research (e.g. correlative physiological bottom-up processes like motor signals and afferent signals), using tools like machine learning, brain imaging, and brain stimulation techniques. In the following, I present three potential areas of research interest: (i) Experiential Reasoning, (ii) Experiential Identities, and (iii) Experiential Environments. These three areas tie into the work of three unique labs whose visions and work I greatly admire.

1.1 Experiential Reasoning

With significant increases in fake and fallacious information in our daily lives, supporting individuals in reasoning is now more critical than ever.

According to renowned scientists like A. Damasio, J. Haidt, and M. Lieberman, feelings like “intuitions” and “gut-feelings” are essential to how we reason and make decisions. Contrary to popular belief, humans are rarely rational and cautious thinkers. Instead, they rely on their intuitions to make sense of their own state, the world, and others (i.e. sensations of affective valence such as good/bad or like/dislike). While these shortcuts act as efficient rules of thumb that allow people to make decisions quickly without explicit conscious reflection, they are prone to errors caused by factors such as personal biases and limited cognitive resources, which makes our decisions vulnerable to rhetorical manipulation, poor argumentation, and fake news. These shortcomings in reasoning affect not just our analytical thinking, that is, our capacity to logically assess and evaluate argumentation, but also our social skills, such as understanding and communicating emotions. They are especially problematic for individuals with conditions such as autism spectrum disorder, PTSD, or social anxiety, who may struggle not just with analytical thinking but also with picking up on and responding appropriately to social cues, indirect language, and emotions.

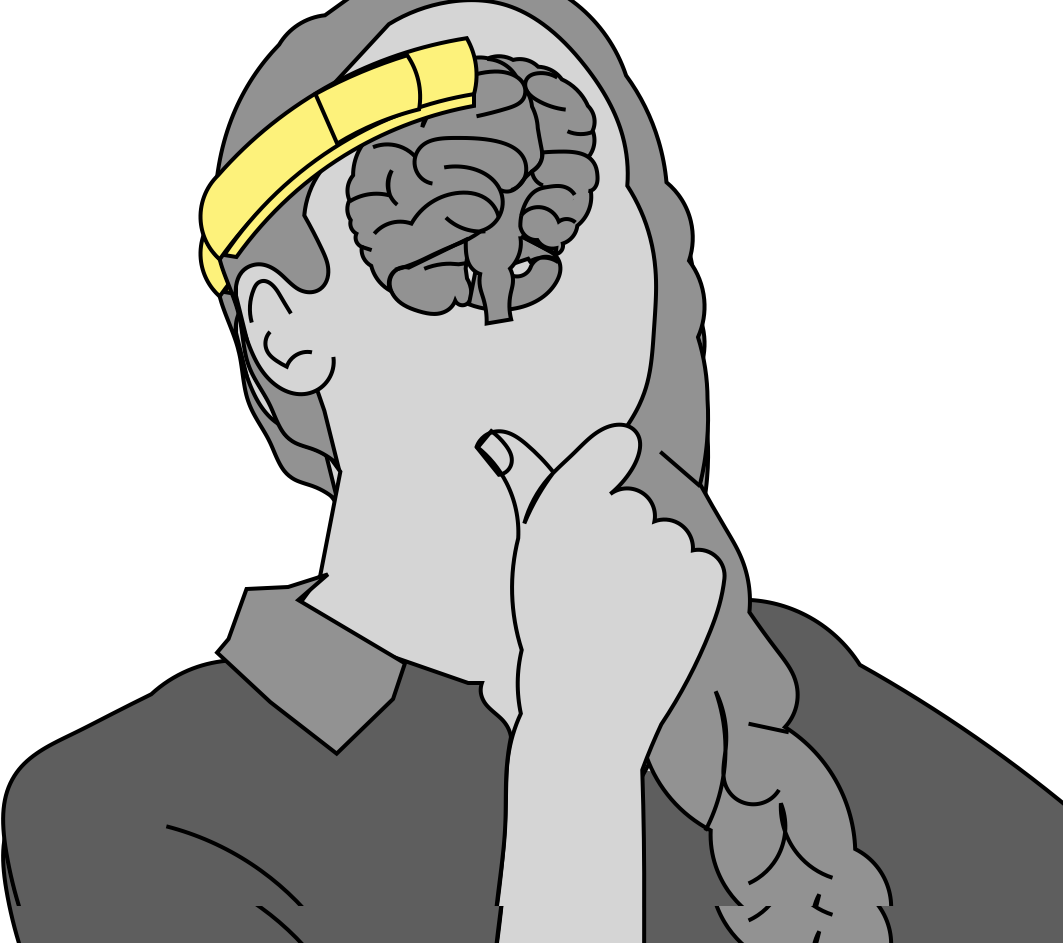

To support people in being more resilient to manipulative, false, or ambiguous information, I believe that augmenting the human reasoning system with an intelligent “organ” or “second brain” can enable more robust information processing and literacy. To demonstrate this vision, I initiated a research project together with Fluid Interfaces PhD student Pat Pataranutaporn on building wearable human-AI co-reasoning systems for enhancing human rationality and decision making. Inspired by concepts from philosophy of language and formal logic, I built a prototype, the “Wearable Reasoner”, that uses explainable AI and natural language processing techniques such as argumentation mining to perform real-time analysis of logical structures and linguistic patterns in speech. In an evaluation with 18 participants, the system with explainable feedback was effective in helping users process and differentiate logical information from illogical information (in our study limited to “bare-assertion fallacies”), thus enabling users to access the logical structure of information and ‘why’ it could be considered false. These findings were published at the annual Augmented Humans conference, a top-tier venue of the ACM (Association for Computing Machinery), and the system will be included in a new exhibit on AI at the MIT Museum in 2021. These results open the way for deeper investigation into the potential of human-AI co-reasoning systems.

In the future, I envision human-AI reasoning systems that are more deeply integrated with conscious structures such as implicit and explicit awareness in reasoning (e.g. “intuitions” and “controlled thinking”), and I want to explore how these might be artificially invoked with haptic, auditory, or visual feedback, or through non-invasive brain stimulation techniques. Further, implementing a knowledge graph-based machine learning architecture like Logical Neural Networks (LNNs) could enable more precise classification and more accessible explanations of logical structures and shortcomings, for instance through analogies.

Additionally, I believe there is great potential for social reasoning systems. For instance, my little brother is diagnosed with Asperger's and often finds it very challenging to understand and correctly interpret implicit cues and intent in conversations, which makes social interactions difficult for him. I believe that training a natural language processing model on concepts from speech act theory could enable people like him to distinguish more effectively between different statement objectives and intentions.

1.2 Experiential Identities

With the increasing digitalization of our lives, it seems that the way we perceive and characterize ourselves in the future will no longer be limited to our biological appearance. Instead, we will experience our self-concept as fluid, allowing us to transition between multiple bodies, avatars, or appearances much like we change our clothes. With new advances in generative AI, it is also now possible to create highly realistic and interactive versions of real people such as historical figures, celebrities, and politicians. Research on embodiment in virtual reality has shown that self-perception exerts a top-down influence on various cognitive processes like intelligence, motor skills, and emotion. In the future, I believe the experience of embodying or replicating various identities will be widely used to promote and develop our cognitive skills and mental health.

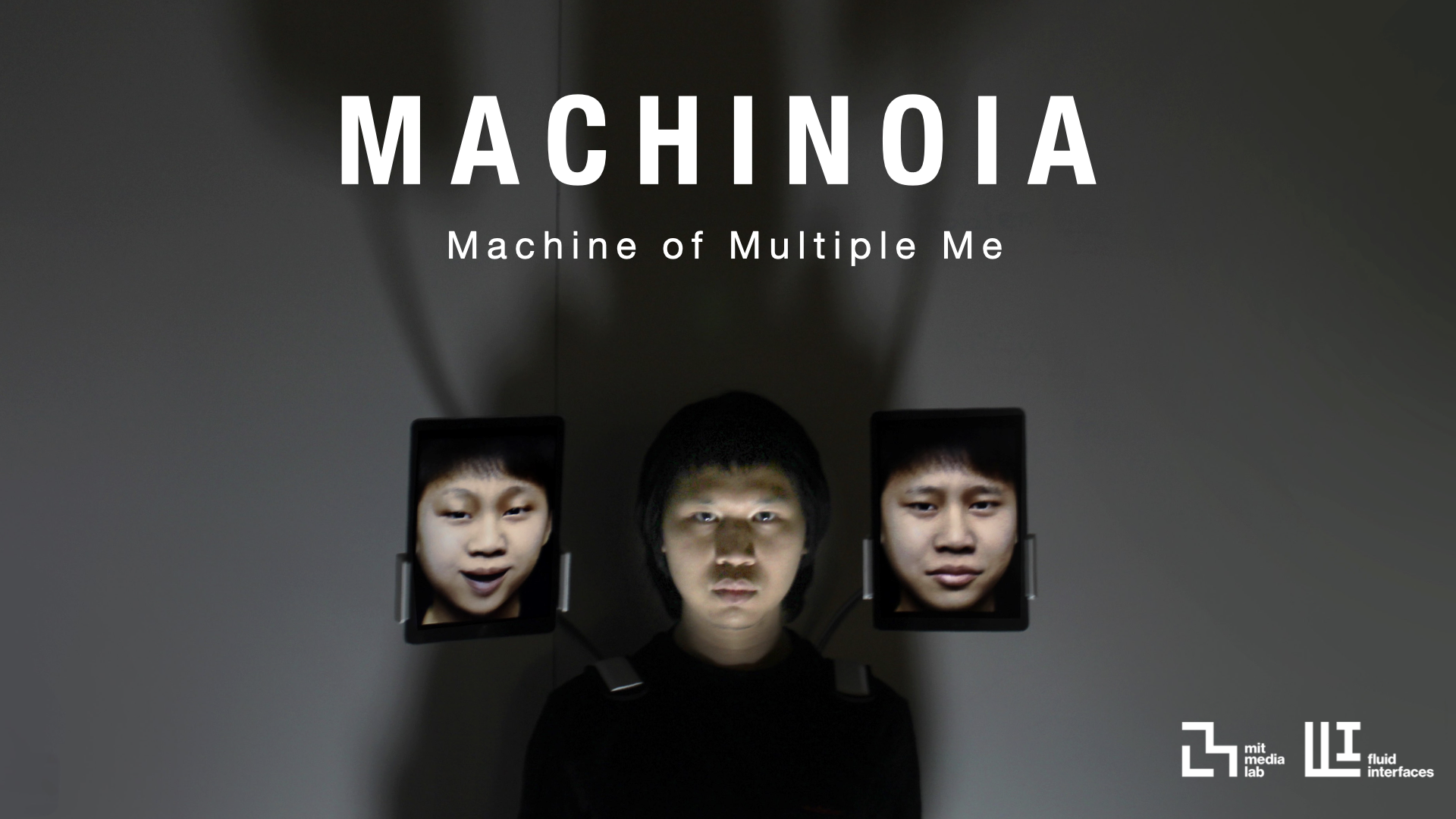

As an exploration of these ideas, I have co-authored a speculative art installation titled “Machinoia: Machine of Multiple Me”, submitted to SIGGRAPH Asia 2020, that uses generative adversarial networks (GANs) and attitude extraction algorithms to let users embody multiple versions of themselves. When wearing the installation, the user is able to consult past or future selves that extend from their back as additional heads. I believe such a system has great potential for enhancing our introspective capabilities by enabling us to remember our earlier motivations and attitudes and to gain perspectives on the future. In 2019, the installation was awarded an MIT Council for the Arts Director's Grant.

Additionally, embodying the identities of others could be equally impactful for improving our cognitive skills and behavioral health. For instance, what if teachers could teach their students as Albert Einstein, Marie Curie, or Abraham Lincoln? Or what if therapists could assume the identities of deceased family members, trauma inducers, or hallucinated personalities in schizophrenia? In a paper currently under review for Nature Machine Intelligence, co-authored with researchers from the MIT Media Lab and the University of California Santa Barbara, I discuss these potential applications of generative AI in education and healthcare and present a working pipeline that teachers and researchers can easily implement. These discussions reveal great promise for future research.

Another promising research area is taking an interactive snapshot of the identities of others. Just as photographs today let us keep some part of our loved ones near when they are separated from us by death or distance, I believe the future will let us save AI replications of friends and family members from specific times in our lives as interactive photographs that we can talk to. This could be especially impactful for astronauts on future space exploration missions, as they will be away from their friends and family for long periods without proper real-time communication. In a NASA grant proposal that I have submitted with the MIT Media Lab and the University of California Santa Barbara, we propose to build interactive AI representations of friends, family members, and partners that astronauts can bring along on long-duration deep space missions to promote their behavioral health and emotional well-being. Our proposal is currently in the final round.

1.3 Experiential Environments

It is no overstatement that the ways we experience the world play a massive role in our thinking, actions, and emotional well-being. Recent movements in cognitive science suggest that cognition and conscious phenomena do not reside in the brain alone but are intricately interwoven with the body in its relation to environmental affordances. This can be observed in various mental and experiential disorders like dementia, PTSD, and schizophrenia. In dementia the world has become unfamiliar, in PTSD everyday objects and persons appear threatening, and in schizophrenia self- and world-phenomena are distorted. The experiential aspects of these disorders have in particular been identified as distortions of implicit structures of consciousness. With advances in wearable and sensor technology, it is now possible to intervene in and modify these conscious world- and self-phenomena by stimulating the body, the brain, and the senses in various ways. I believe that the future involves technology that directly feeds into and alters these structures, providing doctors with tools to treat individuals with such disorders.

At Harvard Innovation Labs, and in collaboration with schizophrenia and consciousness researchers at the University of Copenhagen, I co-founded a venture to develop virtual reality-based tools that assist doctors and researchers in understanding and identifying, at an early stage, non-psychotic self and world distortions in patients with schizophrenia. The tools use subtle body and world alterations in virtual reality to visualize specific experiential aspects of schizotypy, such as a diminished sense of agency over body movements, abnormal intensity or persistence of visual perceptions, disturbances in the perceptual integrity or organization of objects or scenes, and the feeling that strangers are unusually focused on oneself.

Further, I believe that the ability to take patients with environmentally conditioned disorders like dementia, PTSD, and various kinds of anxiety to different environments can be a significant treatment tool for therapists and doctors, e.g. as exposure therapy. For instance, what if therapists could take patients back in time to their childhood homes? Or what if they could take patients suffering from PTSD back to the place where the trauma was induced and help them cope? Taking individuals back in time to relive old “phenomenological spaces”, and even altering those spaces, could be impactful in treatment. I currently have a working prototype pipeline that can reconstruct environments like one’s childhood home from old video recordings, allowing patients to travel back to a “frozen in time” location through virtual reality. I would be very interested in continuing this research on experiential environments.

Conclusion

In this statement of objectives I have presented many ideas: some that I have already worked on, some that I want to continue working on, and some that inspire my future research interests. The common element that ties these ideas together is my motivation to use experiential structures and phenomena as design materials for novel cognitive, perceptual, and affective technologies that feel like extensions of our natural thinking, self-perception, and sense of being in a meaningful world. My proposed research attempts to bridge the gap between the computations of the cognitive mind and the experiences of the conscious mind. This will enable a future where computers are capable not only of improving our individual skills and performance but also of fundamentally changing how we feel, perceive, and interact with computers, the world, and each other.